Cheater's Gambit

- Category: Dev

- 200 points

- Solved by JCTF Team

Description

Solution

This writeup will have a straight forward solution, and an appendix getting into the intricacies of the debugging of the first version of this challenge, and the reasons of the bugs. Why? Because it's interesting (IMHO, of course), and even it's not strictly speaking InfoSec hacking, it is definitely hacking in the purest sense of the term.

Solve:

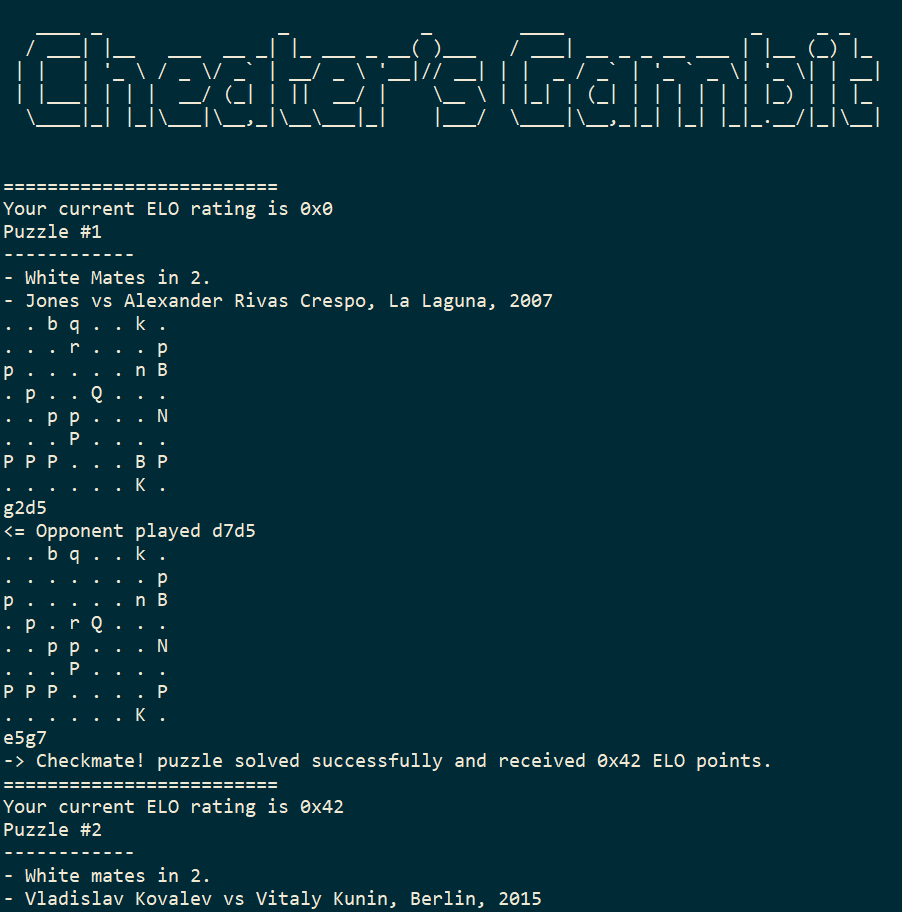

The challenge is about quickly solving a bunch of chess problems, leading to a mate. We don't only have to give the first move, but the whole variation. After the fix (see the appendix), if you find the move in 2 in time, you could even try your chance manually (you get only a couple of seconds); but that is very tough to solve 39 problems like that in a row.

So it's time to get help from the computer to mate the opponent. This time (only), it's not cheating!

The first step is to transform the ascii-board in a format acceptable by a chess engine. The natural choice is the Forsyth–Edwards Notation (FEN), a standard for describing chess positions. By simple text processing of the board, we get the first field of the FEN. The mate description gives us the second field (whose turn it is). We'll have to assume that no side can castle, and that there is no pending "en passant", so that we can complete to a valid FEN by adding "- - 0 1".

Now that we have the problem described in a workable way, we want to feed it to an engine. There are two main protocols used to communicate with an engine, CECP (a.k.a. XBoard protocol) and UCI. UCI returns the move in the format used in this challenge, so that will be our choice. A natural choice is the best engine these days, Stockfish. This may be not the right choice though for this kind of problems (see the appendix!), but we'll go with that for now. And as a bonus, there is even an easy-to-use interface library for python.

The flag itself is given byte by byte for each problem solved.

Based on all that, here is a winning script (using a Stockfish-based engine, but specialized for problem solving; read the appendix). Pay attention that it may not always work, for some reasons explained in the appendix below (after this 4th promo, you really have to read it).

#!/usr/bin/env python3

from pwn import *

from models import Stockfish

import sys

def parse_board_and_get_move(board,turn):

fen=""

count = 0

for c in board:

c=chr(c)

if c == ".":

count+=1

if count == 8:

fen+= str(count)

count = 0

else:

if count > 0:

fen+= str(count)

count = 0

fen+=str(c)

if count > 0:

fen+= str(count)

fen += " "+ turn + " - - 0 1"

log.info (fen)

stockfish.set_fen_position(fen)

# stockfish.set_depth(50)

best_move = stockfish.get_best_move()

print (best_move)

return (best_move)

stockfish = Stockfish("/usr/games/crystal")

flag=""

p = remote("cheatersgambit.ctf.bsidestlv.com", 3456)

while(1):

p.recvuntil(b"Your current ELO rating is")

print (p.recvuntil(b"------------\n"))

turn = "w"

turn_input = p.readline()

print (turn_input)

if "Black" in str(turn_input):

turn = "b"

p.readline()

board = b"/".join(p.recvlines(8)).replace(b" ",b"")

best_move = parse_board_and_get_move(board,turn)

p.sendline(best_move.encode(encoding='ascii'))

while(1):

answer = p.readline()

print (answer)

if b"Opponent played" in answer:

move = answer.decode(encoding='ascii').split(" ")[-1]

p.readlines(8)

stockfish.make_moves_from_current_position([best_move, move])

best_move = stockfish.get_best_move()

log.info (best_move)

p.sendline(best_move.encode(encoding='ascii'))

else:

break

if not b"Checkmate" in answer:

print (answer)

break

else:

flag += chr(int(answer.decode(encoding='ascii').split(" ")[7][2:],16))

if b"------------------------------------------------" in p.readline():

print ("flag: ", flag)

sys.exit()Flag: BSidesTLV2021{Queens_Gambit_Is_Awesome}

Appendix:

The challenge at first didn't work the way it was intended to. So I "debugged" it from a chess and chess engine perspective to understand why even with a good move, the challenge returned that another move was expected. After some discussion, the challenge was modified and mostly fixed (and simplified).

Symptoms:

Longer solutions:

1n3q2/p4p1Q/5p1b/2pP4/6Pk/5P2/1P1B1K1P/8 w - - 0 1

The question is about finding a mate in 3. The expected challenge "solution" is d2h6. But that leads only to a mate in 4. The mate in 3 starts with f2g2

- Kg2 Qg7 2. Be1+ Kg5 3. Qf5#

Other examples: rr3k2/pppq1pN1/1b1p1BnQ/1b2p1N1/4P3/2PP3P/PP3PP1/R4RK1 w - - 0 1

Mate in 3, but expected move (Bh7+) only leads to Mate in 4.

Wrong moves:

5brk/ppq2p1p/2p1rnp1/3p1NQ1/3P2RR/3B1P2/PPP2P1P/7K w - - 0 1

In this mate in 6, the right sequence starts with Qxf6+ (g5f6). But the server returns "Nope, best move was g4g3".

The problem here is that this move is plain wrong, since black can save the day (to a draw) after this poor white move:

- Rg3 h5 closing the black fortress.

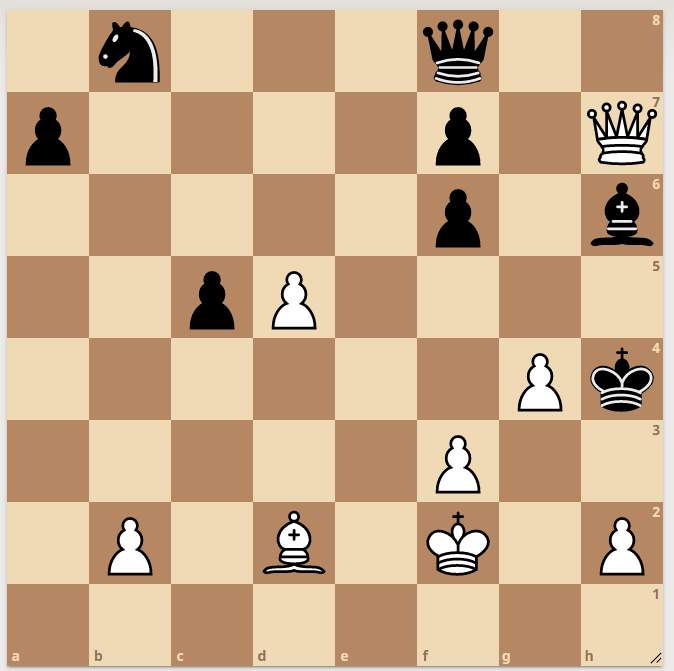

Dual solutions:

1B4k1/2Q2qpp/pp3b2/3b4/P2p4/3P4/1P4rP/4RR1K b - - 0 1

Here, the 3rd black move for mate in 5 can be Qh5+ or Rg3+. Both should be valid, but the server only accepts one of them!

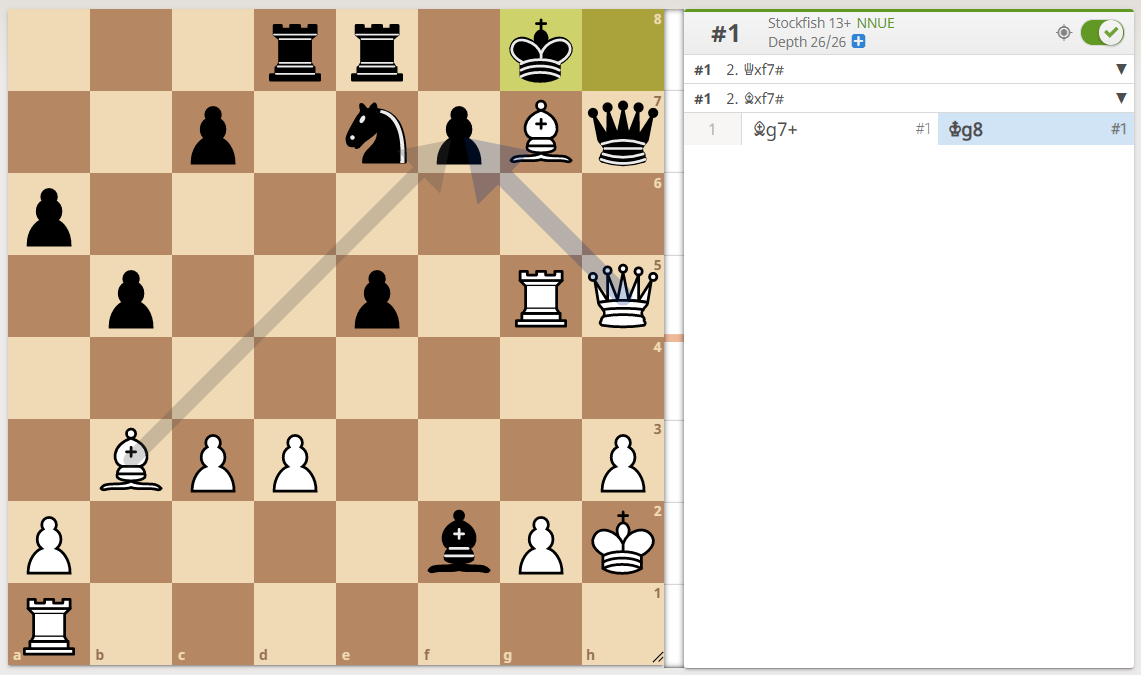

In 3rr2k/2p1np1q/p6B/1p2p1RQ/8/1BPP3P/P4bPK/R7 w - - 0 1, after 1. Bg7+ Kg8, Qxf7# and Bxf7# are both mates. But the server doesn't accept b3f7 (Bxf7)

A last example, in 4nk2/pp4rQ/4P2p/2pq3r/3p2pN/PP4P1/5P2/R3R1K1 w - - 0 1: after 1. Ng6+ Rxg6, there are 2 mates: e7# or Qf7#. The engine doesn't accept h7f7 (Qf7#).

The root cause(s)

So, what is the problem with the server engine? Well, there are actually several reasons here.

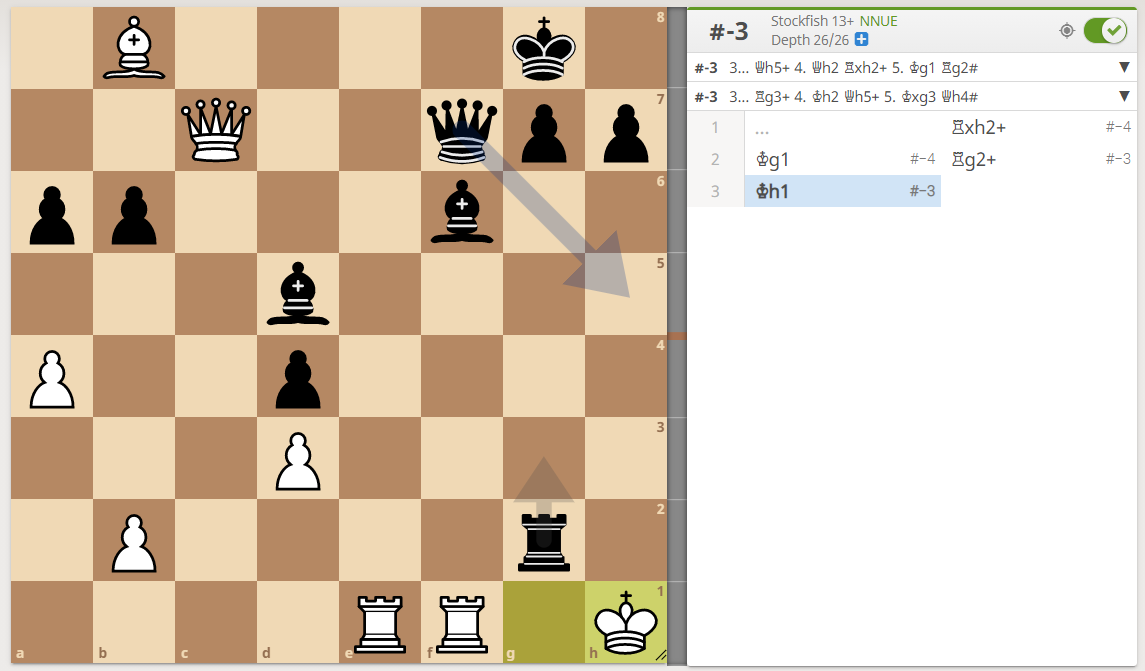

- Playing engine vs solving engine

The server was running a version of Stockfish configured to have a depth of 15 (half-moves). On the paper, that should have been enough, but not really.

Stockfish is arguably the best available chess engine. And in the last few years, its authors even added an hybrid model support using some neural network (NNUE), which made it even stronger.

But being the best at playing chess doesn't mean that it is good at chess problem solving. In fact, even in the human domain, these are different expertise. There are Grandmasters at chess problems, independently to the Chess Grandmaster title. BTW, only very few people were awarded both titles (e.g. the very gifted World Champion Dr GM GM John Nunn).

Playing engines must be efficient but can't allow themselves to do some exhaustive search of all the possibilities. In real game situations, the decision tree grows too fast for even the strongest computers with large memory, to be able to check all the branches. So, engines authors use some pruning strategy, "cutting" some of the branches while exploring the most promising ones.

For these reasons, it is more adequate to use some "chess solving engines", like popeye or chest, to find mate in X moves, in place of Stockfish. There are also some tweaked version of Stockfish that disabled the pruning to make is a "Mate Finder", like this "crystal" branch.

This was leading to different types of errors: in the case of some long mates, the engine may stop the computation, happy with its mate in 7, missing the mate in 6. In other cases, it worked some "good enough winning branch", but even completely missing the mate.

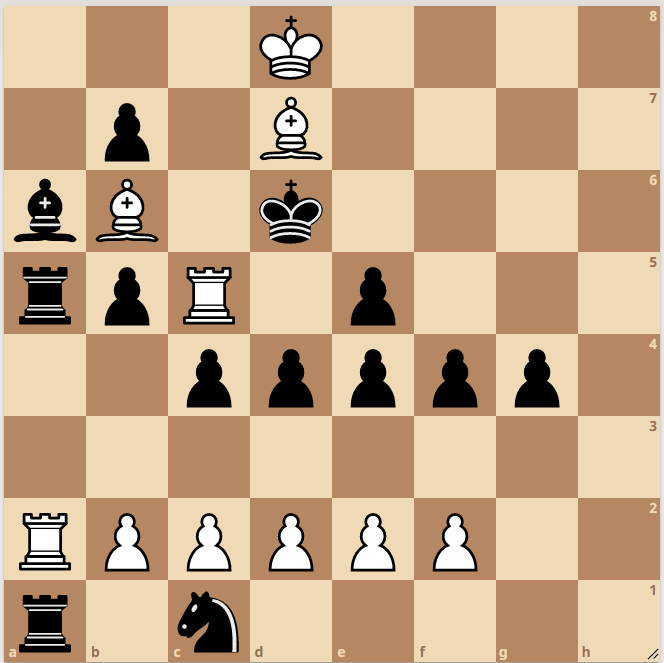

To illustrate this difference between types of engines, here is a nice example (not from the CTF): 3K4/1p1B4/bB1k4/rpR1p3/2ppppp1/8/RPPPPP2/r1n5 w - - 0 1

White plays and mate in 7. [ Try to solve it yourself. It's sometimes easier for a human than for a machine :-) ] Stockfish 14 is stuck at best with some mate in 12 after several minutes of computation, insisting on hitting first one of the black rooks. While Crystal 3.2 (the Stockfish-derived engine, optimized for problems solving) finds the mate in the matter of seconds.

And if you like this one, you will love this mate in 74 moves(!), based on multiple zugzwangs: k7/B3p1p1/PPp1P3/4P2b/p4p1P/P1p2p1K/2P2P2/7B w - - 0 1

- Too low resources given to the server engine

In addition to the previous point, we can't ignore the fact that the server engine must have been rather limited in regards of computation time or memory. This is the only reason we can think of for some really odd moves expected by the engine, especially on some long mates. This hypothesis was confirmed by the challenge author.

- Multiple winning moves

In chess composition, a problem shouldn't have multiple first moves (except if it's specifically notified). Since this challenge seems to have been based on some known database (we guess http://www.wtharvey.com/), all the original problems were sound. But it's possible and absolutely valid that there are multiple winning branches, even with the same amount of total moves. This should have been into account by the server, for example, by comparing the number of moves to the mate for the move proposed by the client.

The fix

It's not easy to fix such problems "in real time" during a short CTF. Another issue was that the author was limited by the computation capability of the server and couldn't consume too much resources.

So it's understandable that the author decided to fix the challenge during the competition by simply leaving only the mates in 2, removing all the longest mates. This circumvented most of the problems described above, except some cases of dual solutions on the second move. But at least, it was possible to get 39 problems in a row to get the flag. It's not working on every run, but at least it makes the challenge solvable.